
Adversarial Examples In The Physical World

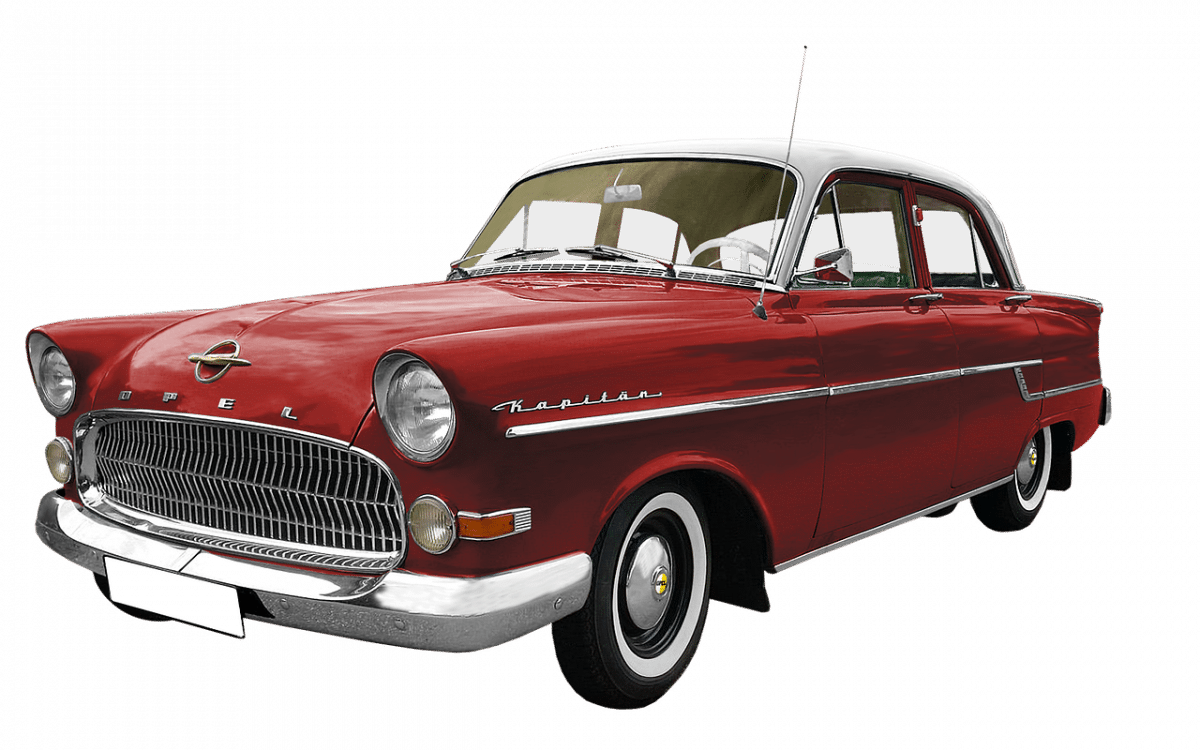

Did you know that a simple sticker on a stop sign can fool an autonomous vehicle into misinterpreting it as a speed limit sign? This phenomenon is known as adversarial examples in the physical world: seemingly harmless modifications to objects that deceive advanced machine learning models. With the growing reliance on artificial intelligence (AI) across industries, understanding and addressing this issue is crucial.

Adversarial examples are not a recent discovery. The concept dates back to 2013, when researchers started exploring vulnerabilities in machine learning algorithms. Initially, adversarial examples were limited to digital images, where carefully crafted modifications could cause misclassification. As autonomous systems began to integrate into everyday life, researchers expanded their focus to physical objects.

The significance of adversarial examples in the physical world cannot be overstated. By making subtle tweaks to a street sign or adding inconspicuous patterns to an object, attackers can exploit flaws in AI systems. This poses a serious risk because autonomous vehicles, security cameras, and other AI-driven technologies rely on visual perception. Such malicious modifications could lead to accidents, false identification, or other dangerous consequences.

To put things into perspective, one team of researchers investigated the vulnerability of image recognition systems used in autonomous driving. They found that by adding just a small, strategically placed sticker to a stop sign, they could cause the AI system to misclassify it. The experiment illustrates the dangers of adversarial examples and underscores the need for robust defense mechanisms.

As the race to develop AI-driven technologies intensifies, the issue of adversarial examples in the physical world necessitates immediate attention. Various methods have been proposed to address this challenge, including adversarial training, which involves generating adversarial examples during the training process to improve the model’s robustness. Additionally, researchers are exploring the use of diverse training datasets, as exposure to a wider range of adversarial examples during training can enhance the system’s ability to generalize.

While these solutions show promise, finding a foolproof defense against adversarial examples remains an ongoing endeavor. With the stakes so high, it is crucial for organizations involved in online advertising, advertising networks, online marketing, and digital marketing to be aware of this issue. As AI becomes deeply intertwined with our lives, safeguarding these systems against real-world adversarial attacks becomes paramount. Only through continuous research, innovation, and collaboration can we hope to overcome this challenge and create a more secure AI-driven future.

Key Takeaways: Adversarial Examples In The Physical World

As an online advertising service, advertising network, or digital marketing expert, understanding the concept of adversarial examples in the physical world is crucial. Adversarial examples refer to inputs deliberately designed to mislead machine learning models, and their existence poses a significant threat to online security and trust. This article will outline the key takeaways related to this topic, helping you navigate the challenges and mitigate the risks associated with adversarial attacks.

| Item | Details |
|---|---|
| Topic | Adversarial Examples In The Physical World |
| Category | Online marketing |
| Key takeaway | A simple sticker on a stop sign can fool an autonomous vehicle into misinterpreting it as a speed limit sign. |
| Last updated | December 30, 2025 |

1. Adversarial Examples: An Emerging Threat to Online Security

Adversarial examples have proven to be an emerging threat in the world of online security. These malicious inputs can mislead machine learning models, leading to incorrect predictions and potentially serious consequences, such as incorrect ad targeting or compromised user data.

2. Attack Vectors in the Physical World

While adversarial attacks are commonly associated with digital inputs, they can also occur in the physical world. Attack vectors in the physical world include manipulated objects, stickers, or even subtle modifications that can fool machine learning models. Adversarial examples in the physical world demonstrate the need for robust defense mechanisms to safeguard online advertising campaigns and user data from potential threats.

3. Limitations of Current Machine Learning Models

One key takeaway is the inherent vulnerability of current machine learning models to adversarial examples. Despite their impressive performance in various applications, these models can be easily fooled by carefully crafted inputs. This vulnerability calls for more robust and adaptive algorithms that can better defend against adversarial attacks.
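To make "carefully crafted inputs" concrete, here is a minimal sketch of the fast gradient sign method (FGSM) applied to a tiny logistic-regression classifier. The weights, bias, input, and `epsilon` are hypothetical values chosen for illustration and are not taken from any real system; production models are far larger, but the mechanism is the same: nudge every feature slightly in the direction that increases the model's loss.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """Fast gradient sign method for logistic regression.

    The gradient of the cross-entropy loss with respect to the input is
    (p - label) * weights, so each feature moves by epsilon in the
    direction that increases the loss.
    """
    p = predict_proba(weights, bias, x)
    return [xi + epsilon * sign((p - label) * w) for xi, w in zip(x, weights)]

# Hypothetical toy model and input (assumptions for illustration only).
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.5, -0.2, 0.3]
label = 1

x_adv = fgsm(weights, bias, x, label, epsilon=0.4)
clean_p = predict_proba(weights, bias, x)      # above 0.5: class 1
adv_p = predict_proba(weights, bias, x_adv)    # below 0.5: class 0
print(f"clean p(class 1) = {clean_p:.3f}, adversarial p(class 1) = {adv_p:.3f}")
```

No feature changes by more than `epsilon`, yet the predicted class flips. That is the vulnerability described above, reduced to three numbers.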

4. The Importance of Adversarial Training

Adversarial training is a crucial defense mechanism against adversarial examples. By incorporating adversarial samples during model training, machine learning models become more resilient to attacks from adversarial inputs. Applying adversarial training to the models behind your online advertising campaigns can enhance their security and trustworthiness.
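The training loop behind this idea can be sketched in a few lines. The example below is an illustration under stated assumptions, not a production recipe: it uses a hand-written logistic regression, FGSM as the attack, and a hypothetical four-point dataset. At every step the model first crafts an adversarial version of the current example against its own current weights, then learns from both the clean input and the perturbed one.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, epsilon):
    """Perturb x by epsilon per feature in the loss-increasing direction."""
    p = predict_proba(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]

def sgd_step(w, b, x, y, lr):
    """One gradient step on the cross-entropy loss for a single example."""
    err = predict_proba(w, b, x) - y
    w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w, b - lr * err

def adversarial_train(data, epsilon, lr=0.1, epochs=20):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, epsilon)    # craft against current model
            w, b = sgd_step(w, b, x, y, lr)      # learn from the clean input
            w, b = sgd_step(w, b, x_adv, y, lr)  # and from its adversarial twin
    return w, b

def accuracy(w, b, data):
    hits = sum((predict_proba(w, b, x) >= 0.5) == bool(y) for x, y in data)
    return hits / len(data)

# Hypothetical, well-separated toy data: (features, label).
train = [([2.0, 2.5], 1), ([-2.0, -3.0], 0), ([3.0, 2.0], 1), ([-2.5, -2.0], 0)]
w, b = adversarial_train(train, epsilon=0.5)
attacked = [(fgsm(w, b, x, y, 0.5), y) for x, y in train]
clean_acc = accuracy(w, b, train)
robust_acc = accuracy(w, b, attacked)
print(clean_acc, robust_acc)
```

The toy data is deliberately easy, so the result only demonstrates the mechanics of the loop. How much robustness adversarial training buys on a real model depends on the attack, the perturbation budget, and the data, and has to be measured.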

5. Transferability of Adversarial Examples

One significant insight is the transferability of adversarial examples across different machine learning models. This means that an adversarial example crafted to fool one model can often deceive multiple other models. This poses a greater threat for online advertising services and advertising networks, as the same adversarial example can potentially mislead multiple systems simultaneously.
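Transferability can be illustrated with two small linear classifiers. In this sketch both models and the input are hypothetical; the second model's weights are assumed to be similar but not identical to the first, as might happen when two systems are trained on comparable data. The perturbation is computed with access to the source model only and is then replayed, unchanged, against the target model.

```python
import math

def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    return 1 if score(weights, bias, x) >= 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """FGSM against a logistic-regression model with the given parameters."""
    p = 1.0 / (1.0 + math.exp(-score(weights, bias, x)))
    return [xi + epsilon * sign((p - label) * w) for xi, w in zip(x, weights)]

# Hypothetical (weights, bias) pairs: the attacker sees only source_model.
source_model = ([2.0, -3.0, 1.0], 0.0)
target_model = ([1.5, -2.5, 1.2], 0.1)

x, label = [0.5, -0.2, 0.3], 1
x_adv = fgsm(*source_model, x, label, epsilon=0.4)

clean_preds = (predict(*source_model, x), predict(*target_model, x))
adv_preds = (predict(*source_model, x_adv), predict(*target_model, x_adv))
print("clean:", clean_preds, "adversarial:", adv_preds)
```

Here the example transfers because the two decision boundaries are close. With very different models, transfer is less reliable, which is one reason model diversity is sometimes discussed as a partial defense.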

6. Physical World Adversarial Examples: Practical Implications

Understanding the practical implications of physical world adversarial examples is crucial. By studying the effectiveness of these attacks, advertisers and marketers can better comprehend the potential risks associated with online campaigns. This knowledge can guide the development of more secure and resilient advertising strategies.

7. The Role of Robust Feature Engineering

Robust feature engineering is an essential aspect of defending against adversarial examples. By carefully selecting and engineering features, machine learning models can better distinguish genuine inputs from adversarial ones. This emphasizes the importance of continuously improving feature engineering practices within the online advertising industry.

8. Adversarial Examples: A Call for Collaboration

Addressing the threat of adversarial examples requires collaboration among various stakeholders. Advertisers, marketers, advertising networks, and machine learning experts must work together to develop robust defense mechanisms and share best practices for mitigating the risks associated with adversarial attacks.

9. Real-World Adversarial Examples: A Reality Check

Real-world adversarial examples highlight the need for continuous evaluation and testing of machine learning models. Simulating real-world scenarios and evaluating model performance against adversarial attacks can help identify vulnerabilities and drive improvements in online advertising strategies.

10. The Importance of Sandbox Environments

Creating and deploying models in sandbox environments is crucial for analyzing the behavior of machine learning models against adversarial examples. Sandboxing allows advertisers and marketers to assess the vulnerability of their systems, test different defense mechanisms, and ensure that their online advertising campaigns are resilient against potential attacks.

11. The Role of Explainable AI

Explainable AI plays a critical role in defending against adversarial examples. By providing insights into the decision-making process of machine learning models, explainable AI techniques can identify potential vulnerabilities and assist in the development of more robust defenses. Incorporating explainable AI in the online advertising industry can enhance transparency and build trust with users.

12. The Need for Adversarial Detection Systems

The existence of adversarial examples necessitates the development of effective adversarial detection systems. These systems should be capable of identifying potential attacks, distinguishing between genuine and adversarial inputs, and triggering appropriate security measures. Investing in such detection systems can fortify the security of online advertising services.

13. Regular Security Audits and Penetration Testing

Regular security audits and penetration testing are essential to identify vulnerabilities in online advertising systems. By simulating adversarial attacks and evaluating the resilience of their models, advertisers and marketers can proactively address potential weaknesses and prevent malicious exploitation of their campaigns.

14. Ethical Considerations in Adversarial Attacks

Adversarial attacks raise ethical concerns regarding the misuse of machine learning models. Advertisers and marketers must exercise responsible use of machine learning techniques and be aware of the potential harm that adversarial examples can cause. Upholding ethical practices in the online advertising industry is crucial in maintaining trust and credibility with users.

15. Ongoing Research and Development

Adversarial examples in the physical world continue to evolve, emphasizing the need for ongoing research and development. Advertisers, marketers, and online advertising professionals must stay updated with the latest advancements in adversarial defense mechanisms, continuously improving their strategies to counter emerging threats.

By understanding these key takeaways, you can protect your online advertising campaigns, mitigate risks, and build trust with your users. Adversarial examples pose a growing challenge, but with the right knowledge and proactive measures, you can ensure the security and reliability of your digital marketing efforts.

Adversarial Examples In The Physical World FAQ

1. What are adversarial examples in the physical world?

Adversarial examples in the physical world refer to manipulated images, objects, or physical attributes that are designed to deceive machine learning systems. These examples can cause AI algorithms to misclassify or interpret them incorrectly.

2. How do adversarial examples impact online advertising?

Adversarial examples pose a threat to online advertising as they can be used to manipulate the algorithms that determine ad placements, targeting, and optimization. Attackers might exploit vulnerabilities in the system to force ads to undesired locations, misrepresent products, or target specific groups maliciously.

3. Can adversarial examples affect ad targeting?

Yes, adversarial examples can affect ad targeting. By crafting deceptive images or input data, attackers can confuse the targeting algorithms and manipulate the demographic or contextual targeting of ads. This can result in ads being delivered to irrelevant audiences or the wrong platforms.

4. Are there any known instances of adversarial attacks on online advertising?

While specific cases may not be widely reported due to confidentiality concerns, there have been instances of adversarial attacks on online advertising systems. These attacks often aim at exploiting vulnerabilities in ad serving platforms, programmatic advertising algorithms, or targeting mechanisms.

5. How can online advertising platforms defend against adversarial examples?

Online advertising platforms can implement various defense mechanisms to mitigate the risks posed by adversarial examples. These measures include robust online fraud detection systems, regularly updated security protocols, enhanced anomaly detection algorithms, and continuous monitoring for suspicious ad placements or targeting patterns.

6. Can adversarial attacks impact the performance of digital marketing campaigns?

Yes, adversarial attacks can impact the performance of digital marketing campaigns. By manipulating ad creatives or targeting parameters, attackers can decrease campaign effectiveness, waste ad spend on irrelevant impressions, or harm the brand’s reputation by associating it with inappropriate content.

7. How can advertisers protect their campaigns against adversarial examples?

To protect campaigns against adversarial examples, advertisers should partner with reputable advertising networks or agencies that follow industry best practices in ad security. Regular monitoring of campaign performance, open communication with advertising partners, and staying up-to-date with security research can also help in preventing and addressing any potential attacks.

8. Can advertisers detect adversarial attacks on their campaigns?

Detecting adversarial attacks on campaigns can be challenging, but certain signals can indicate their presence. Unexplained changes in ad performance metrics, sudden fluctuations in targeting accuracy, or unexpected ad placements are potential signs that an adversarial attack may be occurring.
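One simple way to watch for unexplained changes in a metric is a rolling z-score check. The sketch below is a minimal illustration that assumes you can export a daily click-through rate series; the function name `flag_anomalies`, the seven-day window, the threshold of three standard deviations, and the sample numbers are all hypothetical choices, not an industry standard.

```python
from statistics import mean, stdev

def flag_anomalies(daily_ctr, window=7, threshold=3.0):
    """Return indices whose CTR deviates from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(daily_ctr)):
        history = daily_ctr[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(daily_ctr[i] - mu) > threshold * sigma:
            flagged.append(i)
    return flagged

# Hypothetical daily click-through rates (as fractions), with a spike at index 9.
ctr = [0.021, 0.019, 0.020, 0.022, 0.020, 0.021,
       0.019, 0.020, 0.021, 0.058, 0.020]
print(flag_anomalies(ctr))  # [9]
```

A flagged day is a prompt for investigation, not proof of an attack: seasonality, creative changes, or tracking bugs can produce the same signal. Note also that a spike inflates the standard deviation of the following windows, so a real monitor might exclude flagged days from the history.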

9. What steps can advertisers take to report adversarial attacks?

Advertisers should report any suspected adversarial attacks to their advertising network or platform immediately. They should provide detailed information about the issue, including campaign IDs, affected ad units, and any associated suspicious patterns or characteristics. Timely reporting helps the platform identify and investigate potential threats more effectively.

10. Do adversarial attacks only target large advertising networks?

No, adversarial attacks can target any advertising network, regardless of its size. Attackers may exploit vulnerabilities in smaller networks to test their techniques or take advantage of less advanced security measures. It is essential for all advertising networks, regardless of their size, to implement robust security measures.

11. Can adversarial examples be used to inflate ad click-through rates?

Yes, adversarial examples can be used to inflate ad click-through rates artificially. Attackers can create deceptive clicks that imitate genuine user interactions, which can lead to misleading reporting metrics and misinformed campaign optimizations.

12. What role does machine learning play in mitigating adversarial attacks?

Machine learning can play a crucial role in mitigating adversarial attacks. By integrating anomaly detection algorithms, machine learning systems can identify and block malicious or misleading inputs. Additionally, ongoing research in the field of adversarial machine learning helps develop advanced defense mechanisms against evolving attack techniques.

13. How can advertisers ensure their ad placements are not compromised?

Advertisers can ensure their ad placements are not compromised by partnering with reputable, well-established advertising networks that adhere to strict security protocols. Regular communication with the network’s security team, leveraging fraud detection technologies, and using whitelisting measures for ad placements can help in maintaining a secure ad environment.

14. Are there any regulations in place to address adversarial attacks in advertising?

Currently, there may not be specific regulations focused solely on adversarial attacks in advertising. However, existing regulations such as data privacy laws and guidelines on ad transparency indirectly contribute to protecting against malicious activities, including adversarial attacks.

15. How important is collaboration among advertisers and platforms in combating adversarial attacks?

Collaboration between advertisers and platforms is critical in combating adversarial attacks. Advertisers should actively engage with their advertising partners to share insights and address any security concerns. Collaborative efforts, such as sharing data on suspicious activities or participating in threat intelligence sharing programs, can collectively strengthen defenses against adversarial attacks.

Conclusion

In conclusion, the concept of adversarial examples in the physical world poses a significant threat in the realm of online advertising and digital marketing. This article shed light on the potential consequences and challenges that advertisers and marketers may face due to the existence of these physical adversarial examples. Key points and insights discussed included the vulnerability of machine learning algorithms and the increasing sophistication of attacks, the potential impact on brand reputation and customer trust, and the need for robust defenses to mitigate these risks.

One of the primary takeaways from this article is the vulnerability of machine learning algorithms to physical adversarial examples. These examples exploit the inherent weaknesses and limitations of these algorithms, leveraging their inability to differentiate between legitimate inputs and carefully crafted malicious ones. As online advertising heavily relies on machine learning algorithms for tasks such as image recognition and content categorization, the susceptibility to adversarial examples raises concerns about the integrity of the entire advertising ecosystem. Advertisers and marketers must acknowledge this vulnerability and invest in robust defense mechanisms to ensure the trustworthiness of their advertising content and campaigns.

Furthermore, the increasing sophistication of attacks highlighted in the article demonstrates the need for constant vigilance and proactive defense strategies. Adversarial examples in the physical world have evolved from simple abstract patterns to real-world objects that can deceive both humans and machines. This poses significant challenges for advertisers and marketers who strive to deliver authentic, engaging content to their audiences. The potential impact on brand reputation and customer trust should not be underestimated. A successful attack could result in the dissemination of false information or offensive content under the guise of a legitimate advertisement, tarnishing the brand image and eroding customer confidence.

To mitigate the risks posed by adversarial examples, the article emphasized the importance of adopting multiple defense strategies. These include robust adversarial training to enhance the resilience of machine learning algorithms, integrating human judgment and verification in the advertising process, and collaborating with security experts to identify and address vulnerabilities. In addition, continuous monitoring and analysis of advertising campaigns can help detect any suspicious or manipulated content, allowing for timely mitigation measures.

Overall, the existence of adversarial examples in the physical world poses a significant challenge for online advertising and digital marketing. Advertisers and marketers must recognize the vulnerability of machine learning algorithms and the potential consequences of attacks. By investing in robust defense mechanisms and proactive strategies, they can mitigate these risks and maintain the integrity of their advertising content and campaigns. Staying ahead of the evolving landscape of adversarial examples is crucial in ensuring the trustworthiness and effectiveness of online advertising and digital marketing efforts.